Artificial intelligence and robotics spell massive changes to the world of work. These technologies can automate new tasks, and we are making more of them, faster, better and cheaper than ever before.

Surgery was early to the robotics party: Over a third of U.S. hospitals have at least one surgical robot. Such robots have been in widespread use by a growing variety of surgical disciplines, including urology and gynecology, for over a decade. That means the technology has been around for at least two generations of surgeons and surgical staff.

I studied robotic surgery for over two years to understand how surgeons are adapting. I observed hundreds of robotic and “traditional” procedures at five hospitals and interviewed surgeons and surgical trainees at another 13 hospitals around the country. I found that robotic surgery disrupted approved approaches to surgical training. Only a minority of residents found effective alternatives.

Like the surgeons I studied, we’re all going to have to adapt to AI and robotics. Old hands and new recruits will have to learn new ways to do their jobs, whether in construction, lawyering, retail, finance, warfare or childcare – no one is immune. How will we do this? And what will happen when we try?

A shift in surgery

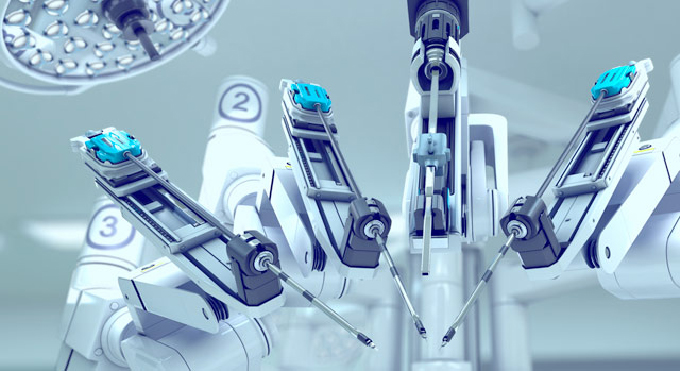

In my new paper, published January 8, I specifically focus on how surgical trainees, known as residents, learned to use the 800-pound gorilla: Intuitive Surgical’s da Vinci surgical system. This is a four-armed robot that holds sticklike surgical instruments, controlled by a surgeon sitting at a console 15 or so feet away from the patient.

Robotic surgery presented a radically different work scenario for residents. In traditional (open) surgery, the senior surgeon literally couldn’t do most of the work without constant hands-in-the-patient cooperation from the resident. So residents could learn by sticking to strong “see one, do one, teach one” norms for surgical training.

This broke down in robotic surgery. Residents were stuck either “sucking” at the bedside – using a laparoscopic tool to remove smoke and fluids from the patient – or sitting in a second trainee console, watching the surgical action and waiting for a chance to operate.

In either case, surgeons didn’t need residents’ help, so they granted residents a lot less practice operating than they did in open procedures. The practice residents did get was lower-quality because surgeons “helicopter taught” – giving frequent and very public feedback to residents at the console and intermittently taking control of the robot away from them.

As one resident said: “If you’re on the robot and [control is] taken away, it’s completely taken away and you’re just left to think about exactly what you did wrong, like a kid sitting in the corner with a dunce cap. Whereas in open surgery, you’re still working.”

Shadow learning

Very few residents overcame these barriers to effectively learn how to perform this kind of surgery. The rest struggled – yet all were legally and professionally empowered to perform robotic surgeries when they finished their residencies.

Successful learners made progress through three norm-bending practices. Some focused on robotic surgery in the midst of medical school at the expense of generalist medical training. Others practiced extensively via simulators and watched recorded surgeries on YouTube, even though the profession prized learning in real procedures. Many learned through undersupervised struggle – performing robotic surgical work close to the edge of their capacity with little expert supervision.

Put together, I called these practices “shadow learning,” because they ran counter to norms and residents engaged in them out of the limelight. Also, none of this was openly discussed, let alone punished or forbidden.

Shadow learning came at a serious cost to successful residents, their peers and their profession. Shadow learners became hyperspecialized in robotic surgery, but most were destined for jobs that required generalist skills. They learned at the expense of their struggling peers, because they got more “console time” when senior surgeons saw they could operate well. The profession has been slow to adapt to all this practically invisible trouble. And these dynamics have restricted the supply of expert robotic surgeons.

As one senior surgeon told me, robotics has had an “opposite effect” on learning. Surgeons from top programs are graduating without sufficient skill with robotic tools, he said. “I mean these guys can’t do it. They haven’t had any experience doing it. They watched it happen. Watching a movie doesn’t make you an actor, you know what I’m saying?”

The working world

These insights are relevant for surgery, but can also help us all think more clearly about the implications of AI and robotics for the broader world of work. Businesses are buying robots and AI technologies at a breakneck pace, based on the promise of improved productivity and the threat of being left behind.

Early on, journalists, social scientists and politicians focused on how these technologies would destroy or create jobs. These are important issues, but the global conversation has recently turned to a much bigger one: job change. According to one analysis from McKinsey, 30 percent of the tasks in the average U.S. job could soon be profitably automated.

It’s often costly – in dollars, time and errors – to allow trainees to work with experts. In our quest for productivity, we are deploying many technologies and techniques that make trainee involvement optional. Wherever we do this, shadow learning may become more prevalent, with similar, troubling implications: a shrinking, hyperspecialized minority; a majority that is losing the skill to do the work effectively; and organizations that don’t know how learning is actually happening.

If we’re not careful, we may unwittingly improve our way out of the skill we need to meet the needs of a changing world.

Author Bio: Matt Beane is a Project Scientist at the University of California, Santa Barbara.