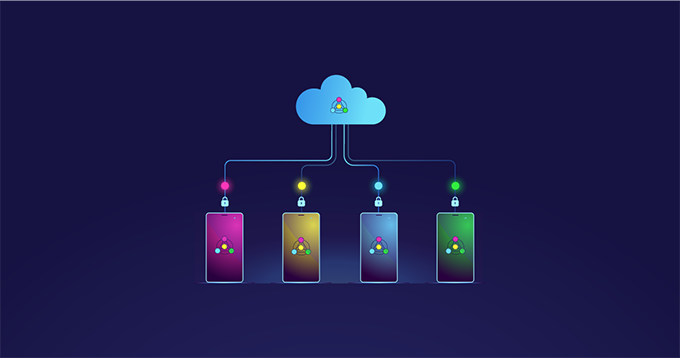

● Federated learning allows AI to learn without sending personal data to the server.

● This technology maintains user privacy while still increasing the intelligence of the system.

● Federated learning offers a middle ground between AI advancement and data protection.

Have you ever been amazed when your phone’s keyboard can guess the words you’re about to type? Or when a health app gives you highly personalized recommendations?

Behind these features is artificial intelligence (AI) that continuously learns from our habits. The problem is that conventional methods of training AI require sending our data to central servers owned by technology companies.

Imagine all our messages, search history, and habits gathered in one place. It would be like forcing all students to move to one giant school: wasteful, complicated, and prone to leaks.

The good news is that there’s another, safer way: federated learning.

Teachers who make house calls

Federated learning is changing the way AI learns. Where the old method required our data to “go” to a server, this new approach sends the AI model directly to our devices.

The analogy is simple. The old method was like having all patients come to one central hospital. The new method is like having a doctor visit each patient’s home.

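In federated learning, the AI learns directly on our phones or computers. When it is done, each device sends only a “learning summary” (an update to the model’s parameters) to the server, not the raw data. The server combines the summaries from many devices into an improved shared model, which is then sent back to the devices for the next round of learning.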
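To make the “learning summary” idea concrete, here is a minimal sketch of federated averaging, one common way of combining on-device updates. It assumes a toy one-parameter model (y ≈ w · x) and three hypothetical devices with made-up data; the function names are illustrative, not taken from any real federated learning library.

```python
import random

def local_train(w, data, lr=0.1, epochs=20):
    """Gradient descent on one device's private data for the toy model y ≈ w * x.
    Only the returned weight leaves the device; `data` never does."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of the squared error (w*x - y)**2
            w -= lr * grad
    return w

def federated_round(global_w, devices):
    """One round of federated averaging: every device trains from the current
    global weight, and the server averages the results, weighted by data size."""
    total = sum(len(data) for data in devices)
    return sum(local_train(global_w, data) * len(data) / total for data in devices)

def make_device(n, rng):
    """Hypothetical private data: n points that roughly follow y = 3x."""
    xs = [rng.uniform(0, 1) for _ in range(n)]
    return [(x, 3 * x + rng.uniform(-0.1, 0.1)) for x in xs]

rng = random.Random(0)
devices = [make_device(n, rng) for n in (5, 10, 20)]

w = 0.0
for _ in range(5):
    w = federated_round(w, devices)
print(round(w, 2))  # prints a value close to 3
```

In each round the server sees only one number per device, yet the shared model ends up close to the pattern hidden in everyone’s data. Weighting by data size means a device with more examples has more influence on the result.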

Our photos, messages, and habits remain stored on our own devices.

Already used by Google

Federated learning isn’t just theory. Google has been implementing it since 2017 for its Gboard keyboard on Android phones.

As we type, Gboard learns the word patterns we use most often. This learning takes place on the phone itself, and only general patterns, not the actual content of what we type, are sent to Google.

Apple takes a similar approach for Siri and the QuickType keyboard on the iPhone, combining local, on-device processing with a technique called differential privacy.

Maintaining medical confidentiality

Federated learning has enormous potential in healthcare. Medical AI research has long been hampered by privacy regulations that restrict the sharing of patient data between hospitals.

With federated learning, researchers can train AI using data from multiple hospitals without moving medical records into one place.

A study in the journal Nature showed that AI trained with this technique could detect brain tumors using data from medical institutions in several countries, while patient data stayed secure at each hospital.

This opens up opportunities for cross-border research without violating privacy rules such as the European Union’s General Data Protection Regulation (GDPR).

Not without challenges

Federated learning isn’t a perfect solution. Because the learning process occurs on the user’s device, our phones have to work harder.

Recent research shows that federated learning can drain a device’s battery and requires a stable internet connection. Low-cost devices with weak processors may struggle to handle the workload.

There are also security challenges. Even though the original data is never transmitted, the model updates a device sends could still leak sensitive information if they are not properly protected.
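How can an update leak data? A small worked case: for a linear model with a bias term trained on a single example, the gradient with respect to each weight is (error × feature) and the gradient with respect to the bias is just the error, so dividing one by the other returns the original features exactly. The record below is hypothetical, and real updates usually average over many examples, which makes exact recovery harder, though not necessarily impossible.

```python
def gradients(w, b, x, y):
    """Gradient of the squared error 0.5 * (w·x + b - y)**2 for a single example."""
    err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [err * xi for xi in x]  # dL/dw_i = err * x_i
    grad_b = err                     # dL/db   = err
    return grad_w, grad_b

# A hypothetical private record on one device: three features and a label.
secret_x = [72.0, 1.0, 130.0]
secret_y = 1.0

w, b = [0.01, -0.02, 0.005], 0.0
grad_w, grad_b = gradients(w, b, secret_x, secret_y)  # this is all the server receives

# Dividing each weight gradient by the bias gradient recovers the features.
recovered_x = [g / grad_b for g in grad_w]
print(recovered_x)  # matches secret_x, up to floating-point rounding
```

This is exactly the kind of gap that the protective techniques discussed next are designed to close.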

Researchers continue to develop additional techniques, such as secure aggregation and differential privacy, to close this gap.

Secure aggregation is a cryptographic technique that allows a server to combine model updates from many clients without being able to see any individual client’s update. A 2022 study showed that this technique can protect user privacy while keeping communication efficient, even when a large number of users take part.
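One widely used idea behind secure aggregation is pairwise masking: every pair of clients agrees on a random vector, one adds it and the other subtracts it, so each masked update looks random but the masks cancel when the server adds everything up. The sketch below is a simplified illustration under stated assumptions: the shared masks come from a single seeded generator standing in for real key agreement, updates are already encoded as integers, and it ignores clients that drop out mid-round, which real protocols must handle.

```python
import random

MOD = 2**32  # all arithmetic is done modulo a large number

def pairwise_masks(n_clients, dim, seed=42):
    """Stand-in for key agreement: one shared random vector per client pair (i < j)."""
    rng = random.Random(seed)
    return {(i, j): [rng.randrange(MOD) for _ in range(dim)]
            for i in range(n_clients) for j in range(i + 1, n_clients)}

def mask_update(i, update, masks, n_clients):
    """Client i adds the masks it shares with higher-numbered peers and subtracts
    those shared with lower-numbered peers, so every mask cancels in the sum."""
    masked = list(update)
    for j in range(n_clients):
        if j == i:
            continue
        mask = masks[(min(i, j), max(i, j))]
        sign = 1 if i < j else -1
        masked = [(v + sign * m) % MOD for v, m in zip(masked, mask)]
    return masked

# Hypothetical integer-encoded model updates from three clients.
updates = [[5, 1, 7], [2, 8, 3], [4, 4, 4]]
n, dim = len(updates), len(updates[0])
masks = pairwise_masks(n, dim)

masked = [mask_update(i, u, masks, n) for i, u in enumerate(updates)]
# The server sums the masked vectors; each one on its own looks like random numbers.
total = [sum(col) % MOD for col in zip(*masked)]
print(total)  # [11, 13, 14]
```

The server learns the total it needs for averaging, but no single client’s contribution.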

Meanwhile, differential privacy provides a mathematical guarantee that the model’s output will not change significantly when any single individual’s data is added or removed. The technique adds “noise” to the AI learning process. It’s like mixing background noise into a meeting room: we can hear the general conclusion, but we can’t identify individual voices.
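Formally, a randomized mechanism M is ε-differentially private if, for any two datasets D and D′ that differ in one person’s data and any set of outcomes S, Pr[M(D) ∈ S] ≤ e^ε · Pr[M(D′) ∈ S]. A common way to add the “noise” in machine learning is to first clip each update to a maximum size and then add Gaussian noise, the basic step that methods such as DP-SGD build on. The sketch below shows only that step; the parameter names are illustrative, and choosing a noise level that achieves a specific ε requires privacy accounting that is not shown here.

```python
import math
import random

def clip(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm, which bounds
    how much any one person's data can move the model."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]

def privatize(update, rng, max_norm=1.0, noise_multiplier=1.0):
    """Clip, then add Gaussian noise with std noise_multiplier * max_norm to each value."""
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clip(update, max_norm)]

rng = random.Random(0)
raw = [3.0, 4.0]                  # norm 5, so clipping shrinks it to roughly [0.6, 0.8]
one_report = privatize(raw, rng)  # a single noisy report reveals little on its own

# Hypothetically, 10,000 people hold the same update and each reports it with fresh noise:
# individual reports are unreliable, but their average lands near the clipped update.
reports = [privatize(raw, rng) for _ in range(10_000)]
avg = [sum(col) / len(reports) for col in zip(*reports)]
print(avg)  # close to [0.6, 0.8]
```

This mirrors the meeting-room analogy: any one voice is drowned out, while the overall conclusion still comes through.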

A 2022 study showed that combining differential privacy with secure aggregation can provide double protection: the server does not see individual updates, and the results of the data processing are also protected from in-depth statistical analysis.
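A hypothetical sketch of how the two layers can fit together: each client clips its update, adds its own small share of Gaussian noise, encodes the result as an integer, and masks it. The server can decode only the noisy total. Splitting the noise across clients like this (often called distributed differential privacy) is one possible design, not necessarily the one used in the study above. The sketch also assumes all clients stay online and uses a seeded generator in place of real key agreement.

```python
import math
import random

MOD = 2**32    # masked arithmetic happens modulo a large number
SCALE = 2**16  # fixed-point scale: real numbers become integers before masking

def encode(v):
    return round(v * SCALE) % MOD

def decode(v):
    if v >= MOD // 2:  # the upper half of the range represents negative numbers
        v -= MOD
    return v / SCALE

def client_report(i, update, n_clients, sigma_total, rng, pair_masks):
    """Clip to norm 1, add this client's share of Gaussian noise, encode, then mask."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, 1.0 / norm) if norm > 0 else 1.0
    # Each client adds std sigma_total / sqrt(n); independent noise sums to std sigma_total.
    share = sigma_total / math.sqrt(n_clients)
    report = [encode(x * scale + rng.gauss(0.0, share)) for x in update]
    for j in range(n_clients):
        if j != i:
            mask = pair_masks[(min(i, j), max(i, j))]
            sign = 1 if i < j else -1
            report = [(r + sign * m) % MOD for r, m in zip(report, mask)]
    return report

rng = random.Random(1)
n, dim = 50, 2
updates = [[0.3, -0.4] for _ in range(n)]  # hypothetical updates (norm 0.5, so not clipped)
pair_masks = {(i, j): [rng.randrange(MOD) for _ in range(dim)]
              for i in range(n) for j in range(i + 1, n)}

reports = [client_report(i, u, n, 0.5, rng, pair_masks) for i, u in enumerate(updates)]
# The server adds the masked reports; masks cancel and only the noisy total remains.
noisy_sum = [decode(sum(col) % MOD) for col in zip(*reports)]
average = [s / n for s in noisy_sum]
print(average)  # close to [0.3, -0.4], plus a little noise
```

The masks hide each client’s report from the server, and the noise keeps even the final total from pinning down any one person’s contribution.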

A promising middle ground

Amid global concerns about privacy and data misuse by big tech companies, federated learning offers hope.

We don’t have to choose between “using AI but losing privacy” and “preserving privacy but dumbing down technology.” There’s a middle ground.

Of course, there’s still a lot of work to be done. However, the direction of development is clear: future AI must be intelligent without having to snoop on our personal data.

Author Bio: Rachmad Andri Atmoko is Head of the Internet of Things & Human Centered Design Laboratory at Brawijaya University