In March 2022, approximately a month after Russia invaded Ukraine, a video of Volodymyr Zelensky, President of Ukraine, was broadcast on a Ukrainian national channel. In it, the president asks his people to lay down their weapons and return to their families. The video was quickly identified as a deepfake because of its low quality and therefore probably had little impact on the fighting, but this example perfectly illustrates the dangers that deepfakes can pose.

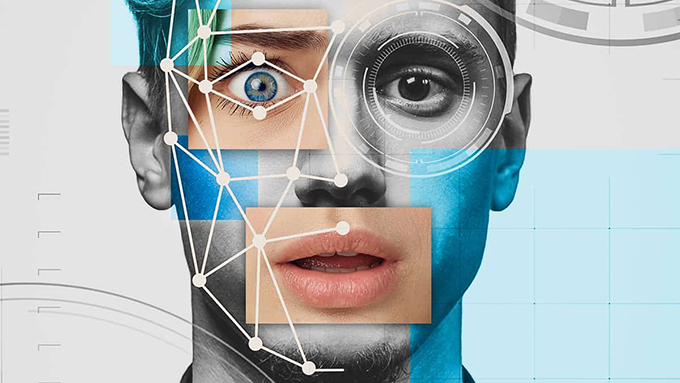

A deepfake is a video in which the face or expression of an individual has been deliberately modified in order to alter their identity or their words. Techniques for modifying facial expressions are not new: a scientific article from 1999, for example, already used 3D models to reconstruct and modify faces. Deepfakes also have many legitimate applications, for example in cinema, advertising and, more recently, video compression.

However, driven by the rise of deep learning (a branch of artificial intelligence from which the word deepfake takes its name), deepfake generation models have been developed for the general public. These models allow anyone to create good-quality deepfakes for free and thus impersonate anyone in a video. Today, deepfakes are used in particular in scams in which fraudsters impersonate trusted people, pushing victims to make money transfers in the belief that they know the person they are talking to.

A few years ago, a video could still be considered authentic by default; this is no longer the case today. This raises a question: can we automatically detect these fake videos using artificial intelligence… when they are themselves generated by AI?

How do we moderate deepfakes on social networks?

Moderation of deepfake videos on social networks is a complicated subject that requires the development of new tools.

Video moderation generally filters out violent or hateful content using artificial intelligence models specifically trained to detect this type of content. A deepfake, however, may appear completely harmless and therefore slip past such models. For example, a video of Volodymyr Zelensky calling on Ukrainians to capitulate contains nothing that would, in itself, justify its removal; it only becomes a problem once we know that it is a deepfake.

When artificial intelligence models cannot moderate content, social networks rely on their users or on human moderators to filter it. But this kind of moderation does not carry over to deepfakes either, since humans cannot detect deepfakes with high accuracy.

Since existing moderation tools are not suited to combating deepfakes, new tools had to be developed, which explains the emergence of a new area of research: automatic deepfake detection.

Passive detectors

Deepfake detectors are themselves usually based on deep learning, and can be either passive or active.

The objective of a passive detector is to predict whether or not an image has been modified without knowing its origin. Such a detector can be used by a social network user, for example, to check whether a video is genuine when in doubt.

To do this, the detector relies on features deemed discriminative, that is, features that make it easy to distinguish deepfakes from original images. For example, having noticed that the first deepfakes never blinked, researchers proposed measuring blink frequency: videos whose blink rate fell below a certain threshold were labeled as deepfakes.
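To make the blink-counting idea concrete, here is a minimal Python sketch. It assumes that a separate facial-landmark detector (not shown) has already produced one eye-openness score per frame; the score itself, the `closed_below` cutoff and the `min_blinks_per_minute` threshold are hypothetical values chosen for illustration, not the ones used by the researchers.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count blinks as transitions from an open eye to a closed eye.

    `eye_openness` holds one score per frame (assumed to come from a separate
    landmark detector); frames scoring below `closed_below` count as "closed".
    Both the score and the cutoff are illustrative assumptions.
    """
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new closing event starts on this frame
        was_closed = is_closed
    return blinks


def looks_like_early_deepfake(eye_openness: Sequence[float], fps: float,
                              min_blinks_per_minute: float = 5.0) -> bool:
    """Flag a clip whose blink rate falls below a hypothetical threshold."""
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        raise ValueError("empty clip")
    return count_blinks(eye_openness) / minutes < min_blinks_per_minute


# Synthetic one-minute clips at 30 frames per second.
real = [0.1 if i % 120 < 3 else 0.3 for i in range(1800)]  # blinks every 4 s
fake = [0.3] * 1800                                          # never blinks
print(looks_like_early_deepfake(real, fps=30))  # False
print(looks_like_early_deepfake(fake, fps=30))  # True
```

The first synthetic clip yields 15 blinks per minute and passes, while the second is flagged. A hand-picked rule like this is easy to read, but it stops working as soon as generators learn to blink.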

Unfortunately, generation models have improved since then, and this type of method is no longer effective, which has pushed researchers to develop new techniques. Most recent techniques therefore no longer rely on expert knowledge, i.e. human-chosen features. Instead, they train deep learning models on large databases of videos labeled “real” or “fake”, letting the models find their own discriminative features.

The major problem with these techniques is that they are only effective at detecting deepfakes produced by the generation methods used to build the training database: they do not generalize well to “new” deepfake generation methods. This problem is a recurring one for deep learning models, although recent proposals are starting to provide solutions.

Active detectors: protecting the image before it is hijacked

Unlike a passive detector, an active detector protects the original image before it is modified. This type of detector is much less widespread than passive detectors, but it could, for instance, allow journalists to protect their images and thus prevent them from being used for disinformation purposes.

A first way to protect an image is to add a watermark, that is, a hidden message that can later be extracted from the image. This message can, for example, contain information about the original content of the video, which makes it possible to compare the image's current content with the hidden information. In the case of a deepfake, the two should not match.

Another active detection method is to apply an “adversarial attack” to the image to prevent deepfakes from being created. An adversarial attack is an imperceptible perturbation of an image, similar to a watermark, that causes a deep learning model to make mistakes.

For example, an adversarial attack added to a “stop” road sign could fool a deep learning model into seeing not a stop sign but, say, a 130 km/h speed-limit sign.
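The stop-sign example can be reproduced on a toy scale. The sketch below uses the Fast Gradient Sign Method (FGSM), one well-known adversarial attack, against a hypothetical linear “stop sign” detector standing in for a real deep network. For a linear score w·x + b, the gradient with respect to the input is simply w, so nudging every pixel by ε in the direction of −sign(w) lowers the score as much as possible under a per-pixel budget of ε. The weights, image and ε are made-up values for illustration.

```python
from typing import List


def score(weights: List[float], bias: float, x: List[float]) -> float:
    """Toy linear detector: a positive score means "stop sign"."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v: float) -> int:
    """Return 1, -1 or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(weights: List[float], x: List[float], epsilon: float) -> List[float]:
    """Fast Gradient Sign Method against the linear detector.

    The gradient of the score with respect to x is `weights`, so moving each
    pixel by -epsilon * sign(weight) pushes the score down as far as possible
    while changing no pixel by more than epsilon. Pixels stay in [0, 1].
    """
    return [min(1.0, max(0.0, xi - epsilon * sign(w)))
            for w, xi in zip(weights, x)]


# Hypothetical 10,000-pixel image and detector weights, for illustration only.
n = 10_000
weights = [0.001 if i % 2 == 0 else -0.001 for i in range(n)]
image = [0.52 if i % 2 == 0 else 0.48 for i in range(n)]
adversarial = fgsm(weights, image, epsilon=0.03)

print(score(weights, 0.0, image) > 0)        # True: detected as a stop sign
print(score(weights, 0.0, adversarial) > 0)  # False: no longer a stop sign
```

A change of at most 0.03 per pixel (on a 0 to 1 scale) is enough to flip the decision, because many tiny changes add up in the score. Attacks that disrupt deepfake generators follow the same logic but aim at degrading the generator's output rather than a classifier's decision.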

In the case of deepfakes, an adversarial attack can disrupt the deepfake generator and thus prevent it from producing a convincing result.

The problem with active detection methods is that they modify the image and therefore degrade its quality, since the changes applied to the pixels are not natural. Moreover, because these hidden signals are of low intensity, they can easily be removed, although removing them degrades the image quality even further, which reduces the chances of the deepfake being mistaken for a real image.

The cat and the mouse

The problem of detecting deepfakes remains unsolved today and, unfortunately, may never be completely solved. The fields of deepfake detection and deepfake generation constantly adapt to each other’s innovations, with a clear advantage for generation, which is always one step ahead of detection.

This observation does not mean that investing in detection is a bad idea. The deepfakes circulating on the Internet are rarely produced with the latest generation models and may therefore be detectable. If a recent generation model were used, the best weapon would probably remain to check the information conveyed by the video against other sources.

Author Bio: Nicolas Beuve is a PhD student in automatic deepfake video detection at INSA Rennes