There are many claims to sort through in the current era of ubiquitous artificial intelligence (AI) products, especially generative AI products based on large language models (LLMs), such as ChatGPT, Copilot, Gemini and many, many others.

AI will change the world. AI will bring “astounding triumphs”. AI is overhyped, and the bubble is about to burst. AI will soon surpass human capabilities, and this “superintelligent” AI will kill us all.

If that last statement made you sit up and take notice, you’re not alone. The “godfather of AI”, computer scientist and Nobel laureate Geoffrey Hinton, has said there’s a 10–20% chance AI will lead to human extinction within the next three decades. An unsettling thought – but there’s no consensus on whether or how that might happen.

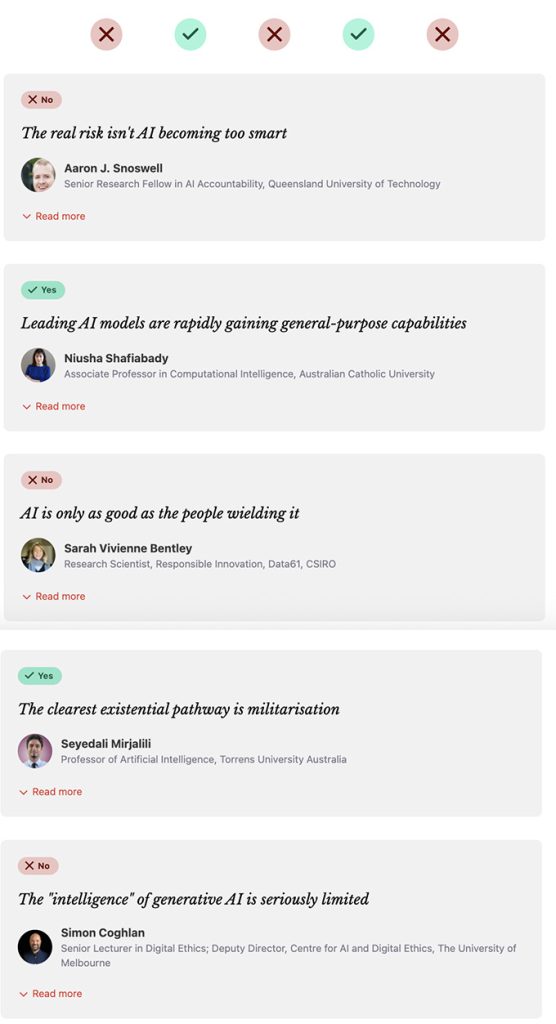

So we asked five experts: does AI pose an existential risk?

Three out of five said no. Here are their detailed answers.

Author Bios: Aaron J. Snoswell is a Senior Research Fellow in AI Accountability at the Queensland University of Technology; Niusha Shafiabady is Associate Professor in Computational Intelligence at the Australian Catholic University; Sarah Vivienne Bentley is a Research Scientist, Responsible Innovation, Data61 at CSIRO; Seyedali Mirjalili is Professor of Artificial Intelligence, Faculty of Business and Hospitality at Torrens University Australia; and Simon Coghlan is Senior Lecturer in Digital Ethics and Deputy Director, Centre for AI and Digital Ethics at The University of Melbourne.