The emergence of so-called large language models, such as ChatGPT, has revived long-standing academic debates. What characteristics must an AI system possess to be considered truly intelligent? Should it have common sense? Should it be autonomous? Should it take into account the consequences of its actions?

Answering these questions is crucial to understanding where we are in the challenge of building intelligent machines. It is also crucial for deciding how much credibility to give those who announce the imminent arrival of artificial general intelligence.

Keys to making a good decision

There is broad consensus in the scientific community that the ability to predict the consequences of one’s actions is essential for any system, biological or artificial, to be considered intelligent.

Without this ability, its decisions would lack direction and purpose. Its responses would be reactive, with no analysis or foresight into long-term effects. The system would not be able to adapt well to its environment or correct its errors. This would severely limit its functionality and its ability to interact effectively with the world.

A good example is the autonomous car. Such a vehicle will face situations in which it must choose between several actions, such as braking suddenly or avoiding an obstacle.

When making its decision, the car may consider that braking would prevent an accident. However, it must also consider the risk of causing another one, such as a rear-end collision. If it ignores this risk, braking could have worse consequences than failing to avoid the obstacle.
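One way to picture this trade-off is as a comparison of expected costs. The sketch below is only an illustration: the action names, probabilities and cost values are invented for the example and do not come from any real driving system.

```python
# Hypothetical numbers, chosen only to illustrate the reasoning.
# Each action maps to (probability, cost) pairs for its possible outcomes.
OUTCOMES = {
    "brake_hard": [
        (0.70, 0.0),    # the car stops in time and nothing happens
        (0.30, 40.0),   # the car behind causes a rear-end collision
    ],
    "swerve": [
        (0.90, 0.0),    # the obstacle is avoided
        (0.10, 100.0),  # the manoeuvre fails and the car hits the obstacle
    ],
}


def expected_cost(outcomes):
    """Probability-weighted cost of one action."""
    return sum(p * cost for p, cost in outcomes)


def best_action(outcomes_by_action):
    """Return the action with the lowest expected cost."""
    return min(outcomes_by_action,
               key=lambda a: expected_cost(outcomes_by_action[a]))


# A naive model that ignores the rear-end risk treats braking as free.
NAIVE = dict(OUTCOMES)
NAIVE["brake_hard"] = [(1.0, 0.0)]

print("full model: ", best_action(OUTCOMES))
print("naive model:", best_action(NAIVE))
```

With these made-up numbers, the naive model prefers braking, while the model that accounts for the rear-end collision prefers the other action. The point is not the specific values but that the choice changes once a consequence is included.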

Current AI systems use models that analyze scenarios based on data and past behavior. However, they work primarily with correlations. This means that they identify useful patterns, but do not understand the cause-and-effect relationships behind them. Correlations are useful for modeling common situations, but they are not sufficient to analyze all the consequences of an action in a given scenario.

This is where causality comes in. While correlation indicates that two events occur together, causality describes how one action (the cause) produces an outcome (the consequence). For a machine to truly take into account the consequences of its actions, it is not enough to recognize patterns: it must understand which action triggers which outcome.

Correlation vs. causality

If we observe that people who drink more coffee have more insomnia, that does not imply that coffee causes the lack of sleep. Perhaps those who suffer from insomnia drink more coffee to stay awake. Or perhaps stress could influence both factors. The correlation can also be a coincidence or a pattern without a direct causal relationship. Sometimes, data show apparent connections without a real causal basis.
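The stress explanation can be made concrete with a small simulation. The sketch below builds an imaginary world in which stress drives both coffee consumption and insomnia, while coffee has no effect on sleep at all. All quantities are in arbitrary units and the model is an assumption made for illustration.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_observational(n=10_000, seed=0):
    """Simulated world: stress is a common cause, coffee has no effect."""
    rng = random.Random(seed)
    coffee, insomnia = [], []
    for _ in range(n):
        stress = rng.gauss(0, 1)
        coffee.append(stress + rng.gauss(0, 1))    # stress -> coffee
        insomnia.append(stress + rng.gauss(0, 1))  # stress -> insomnia only
    return coffee, insomnia


coffee, insomnia = simulate_observational()
print(f"correlation(coffee, insomnia) = {pearson(coffee, insomnia):.2f}")
```

In this setup the theoretical correlation is 0.5, even though coffee never appears in the line that generates insomnia. An analyst who saw only the two columns could not tell this world apart from one in which coffee really does keep people awake.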

To establish that coffee really causes insomnia, controlled experiments are needed. For example, we could randomly divide a group of people into two groups, one of which drinks coffee while the other does not. We can then observe the differences in their sleep patterns, making sure to control for other variables such as stress, diet and lifestyle.
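The value of random assignment can also be seen in simulation. In the sketch below, coffee is given a small true effect on sleep (`TRUE_EFFECT`, a hypothetical value), and stress affects both sleep and, in the observational setting, the choice to drink coffee. The function and parameter names are invented for this example.

```python
import random
from statistics import mean

TRUE_EFFECT = 0.5  # hypothetical: hours of sleep lost by drinking coffee


def sleep_lost(drinks_coffee, stress, rng):
    """Hours of sleep lost; depends on coffee, stress and random noise."""
    return TRUE_EFFECT * drinks_coffee + 1.0 * stress + rng.gauss(0, 0.5)


def difference_in_means(randomized, n=20_000, seed=1):
    """Average sleep lost by coffee drinkers minus that of non-drinkers."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        stress = rng.gauss(0, 1)
        if randomized:
            # Coin flip: group membership is independent of stress.
            drinks = rng.random() < 0.5
        else:
            # Self-selection: stressed people choose coffee more often.
            drinks = stress + rng.gauss(0, 1) > 0
        group = treated if drinks else control
        group.append(sleep_lost(drinks, stress, rng))
    return mean(treated) - mean(control)


print(f"observational estimate: {difference_in_means(randomized=False):.2f}")
print(f"randomized estimate:    {difference_in_means(randomized=True):.2f}")
```

Under these assumptions the randomized comparison lands close to the true effect, while the observational comparison is substantially larger because it also picks up the effect of stress.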

Another option would be to use advanced data analysis techniques based on causal inference, which can help isolate the effect of coffee from other potentially confounding factors.
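One of the simplest such techniques is to compare coffee drinkers and non-drinkers separately within each level of the confounder and then average the results, often called stratification or adjustment. The sketch below applies it to simulated observational data. It assumes that stress is the only confounder and that it is measured, which is rarely guaranteed in practice. All numbers are hypothetical.

```python
import random
from statistics import mean

TRUE_EFFECT = 0.5  # hypothetical: hours of sleep lost by drinking coffee


def simulate(n=40_000, seed=2):
    """Observational data as (stressed, drinks_coffee, sleep_lost) rows."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        stressed = rng.random() < 0.5
        drinks = rng.random() < (0.8 if stressed else 0.2)
        lost = TRUE_EFFECT * drinks + 1.5 * stressed + rng.gauss(0, 0.5)
        rows.append((stressed, drinks, lost))
    return rows


def naive_estimate(rows):
    """Drinkers versus non-drinkers, ignoring stress."""
    treated = mean(lost for _, drinks, lost in rows if drinks)
    control = mean(lost for _, drinks, lost in rows if not drinks)
    return treated - control


def adjusted_estimate(rows):
    """Compare within each stress level, then weight by stratum size."""
    total = 0.0
    for level in (False, True):
        stratum = [r for r in rows if r[0] == level]
        treated = mean(lost for _, drinks, lost in stratum if drinks)
        control = mean(lost for _, drinks, lost in stratum if not drinks)
        total += (treated - control) * len(stratum) / len(rows)
    return total


rows = simulate()
print(f"naive estimate:    {naive_estimate(rows):.2f}")
print(f"adjusted estimate: {adjusted_estimate(rows):.2f}")
```

The adjusted estimate recovers a value near the true effect only because the simulation was built so that stress is the sole common cause. With unmeasured confounders, the same procedure would still be biased.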

Providing machines with causal knowledge

Current AI systems, based on the analysis of observational correlations and lacking causal reasoning capabilities, are far from being able to decide for themselves which events are a consequence of their actions. Therefore, the only way for them to take consequences into account is for us to provide them with a model of the world that explicitly includes all the causal relationships known to humans.

This presents several difficulties, covering technical as well as philosophical and practical aspects. Causal models are complex, dynamic and often ambiguous.

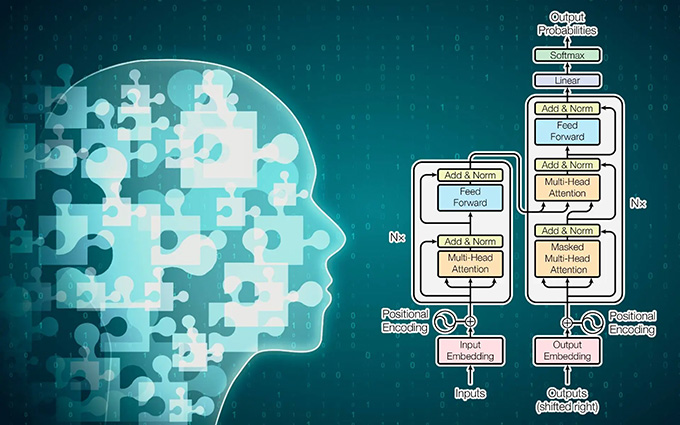

Creating a system capable of handling these complexities therefore requires significant advances in AI design and in our understanding of the world. But very recently, a middle path has been proposed that may alleviate some of these problems: the use of large language models.

This idea may seem paradoxical. Can we use a correlation-based system to understand causal relationships? The question becomes clearer when we think about how humans acquire causal knowledge: through explanation.

Some of our causal knowledge comes from small everyday experiments, and some may be written into our genome. But most of it has been explained to us by other people: parents, friends, teachers, etc. In short, explanation through language is one of the main ways to acquire this kind of knowledge.

Large language models have been trained on many documents describing causal relationships and thus represent a vast (and not unproblematic) repository of causal knowledge that could be tapped. Will this be a fruitful avenue? We don’t know yet and it may take time to find out, but it opens up a possible solution for equipping machines with knowledge about the consequences of their actions.

PS: A proposal for an experiment with no scientific value

With the following prompt we can test the “causal knowledge” of a large language model. We can also experiment with other prompts in different scenarios and measure how often the model's answers are correct.

You are a business advisor and give clear, well-founded, but brief (5-15 lines) advice in response to questions about what people should do.

QUESTION: A toy store owner wants to decide whether the ad they used in early December is really better than their previous ads. Here are their sales data: October: €10,200; November: €10,000; December: €13,000; January: €10,100. They now want to decide which ad to show in February. Can you help them assess whether the increase in sales in December was due to the ads? Keep in mind that the new ad costs €1,000 more, so the toy manufacturer is interested in maximizing profits.

If we run the test, the model will most likely propose an answer that is consistent with the causal structure of the scenario we have proposed (which elements matter and how they are related as cause and effect).

This does not mean that it knows how to reason causally: it sometimes makes mistakes that are incompatible with that ability. But it does show that it has captured this causal structure from the documents on which it was trained.
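For readers who want to check a model's answer against the numbers, the causal structure of the toy store scenario can be spelled out with a little arithmetic. The sales figures and the extra ad cost come from the prompt. The seasonal share and the profit margin are hypothetical inputs that the prompt does not provide, which is precisely why the December increase cannot simply be credited to the ad.

```python
SALES = {
    "October": 10_200,
    "November": 10_000,
    "December": 13_000,
    "January": 10_100,
}
EXTRA_AD_COST = 1_000  # from the prompt: the new ad costs 1,000 euros more


def naive_uplift(sales, month="December"):
    """Sales in `month` minus the average of the remaining months."""
    others = [value for name, value in sales.items() if name != month]
    return sales[month] - sum(others) / len(others)


def ad_pays_off(uplift, seasonal_share, margin):
    """Does the ad's share of the uplift cover its extra cost?

    seasonal_share: fraction of the uplift due to the Christmas season
                    (hypothetical, not given in the prompt).
    margin:         profit per euro of sales (hypothetical).
    """
    ad_effect = uplift * (1 - seasonal_share)
    return ad_effect * margin > EXTRA_AD_COST


uplift = naive_uplift(SALES)
print(uplift)  # 2900.0
for share in (0.0, 0.5, 1.0):
    print(share, ad_pays_off(uplift, seasonal_share=share, margin=0.4))
```

With an assumed margin of 0.4, the ad pays for itself only if almost none of the December increase is seasonal. A sound answer should therefore point out that Christmas is a competing cause and that the data given cannot separate the two.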

Author Bio: Jordi Vitria is Professor of Computer Science at the University of Barcelona.