Artificial intelligence (AI) has given people rapid access to a vast amount of information, making education more convenient. With AI, students can gather the secondary information they need for assignments faster than by searching for articles manually.

However, AI can also cause several problems, such as personal data leaks, bias in how the technology responds to people's mental health, and a lack of originality in students' work if they rely entirely on AI to complete assignments.

These conditions call for stronger ethics to prevent the misuse of AI, which can have serious consequences for students' mental health and intellectual growth. Strengthening ethics should not focus only on the technology itself; it should also build moral awareness of the importance of humanism in how technology is used.

AI and data leaks

Students who use AI are vulnerable to data leaks because their literacy in protecting personal data varies. Students with high data literacy and a concern for data protection are more likely to read in detail which data a given AI platform shares and which it keeps confidential.

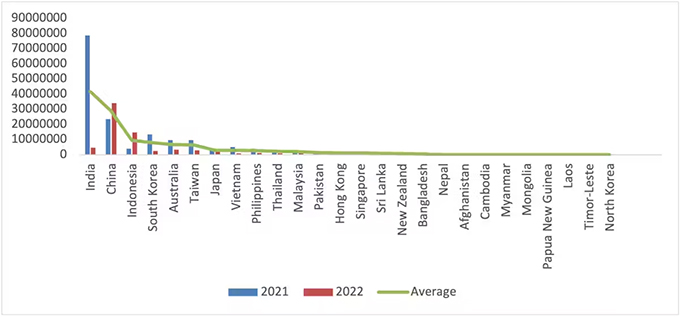

Intensity of Online Data Breach in Asia Pacific 2021 – 2022. Source: https://www.statista.com/. Author provided.

Those with low literacy, however, are more vulnerable to having their data misused by irresponsible parties. Overall, Indonesia ranked third among all countries in the Asia Pacific region for data leaks in 2021 and 2022.

AI as a mental health consultant

In addition, many school-age teenagers and college students turn to AI chatbots for solutions to the psychological problems they face.

The problem is that the reliability of AI's answers to psychological problems is still questionable. In psychiatric research, AI taking over the human role in psychological consultation is described as dehumanization: a human component goes missing, namely empathy and an understanding of the patient's feelings. This gap biases how AI responds to people's psychological problems.

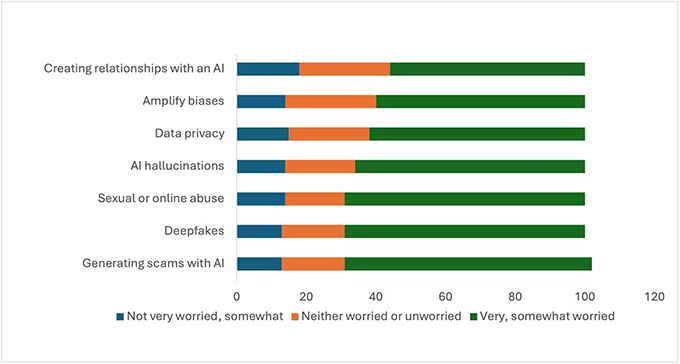

Generative Artificial Intelligence Issues 2023 in the World. Source: Microsoft Global Online Safety Survey 2024. Author provided.

Data from the Global Online Safety Survey 2024 show that heavy dependence on AI brings problems of false beliefs, driven by issues such as AI hallucinations (false or fabricated answers) and deepfakes (fake personas), and can disrupt students' mental and psychological condition.

AI reduces the authenticity of work

Heavy dependence on AI can also reduce the originality of students' work because it does not encourage them to think critically about a problem. According to a study by Columbia University in the United States (US), the human abilities that AI cannot imitate include empathy, creativity and motivation.

In classroom teaching, final assignments, ranging from presentations and field observation videos to papers, are meant to encourage students' motivation, critical thinking skills and creativity. Group presentations, for instance, can foster cooperation, creativity and critical thinking.

However, when students rely heavily on AI, their ability to collaborate and think critically can decline. After all, AI is limited in how well it can support a balanced learning process that trains critical thinking. Users can instruct AI to compose the presentation they want, but their own original thinking and collaboration contribute little to the result.

The same applies to writing papers. This form of assignment is expected to train research skills, information processing and critical thinking. With AI-based chatbots, however, students can produce a paper in just a few minutes.

How to strengthen ethical aspects in the use of AI

Given these risks, strengthening the ethical use of AI is essential in higher education teaching in Indonesia. First, on data protection, universities need to educate students and raise their awareness as early as possible to prevent data leaks from AI use.

This can be done through videos, guidebooks and even word-of-mouth campaigns on personal data protection in the digital world. In Germany and South Africa, for example, universities have made it mandatory for students to protect their personal data when instructors assess them using AI-based technology.

Universities also need to recognize that AI tools have limits in dealing with their students' mental health problems. In health care, mental health problems still require treatment by humans (e.g. psychologists), because empathy and emotion are better handled by people.

Rather than providing diagnoses, AI can help with administrative tasks such as keeping patient medical records, which can improve the accuracy of administration and record-keeping.

On the authenticity of work, academic integrity must be the guiding value. Every student who uses AI to complete an assignment should state this in the assignment itself through a disclaimer. AI users also still need to read the original sources and cite the authors of any material the AI quotes. That way, AI remains only a tool, not the main actor in completing assignments.

Author Bio: Albert Hasudungan is a Lecturer at Prasetiya Mulya University