Can artificial intelligence (AI) be manipulated to do the opposite of what it was designed to do? That’s the goal of adversarial learning , a discipline that explores both the vulnerabilities of AI models and how to defend against them. From bypassing a spam filter to evading facial recognition, examples abound.

But what are these attacks targeting AI models, and how do they work? Let’s explore the behind the scenes.

Spam bypass is one of the simplest and oldest examples of adversarial learning. To avoid having their messages automatically deleted, spammers will hide suspicious words in their texts by distorting them through spelling mistakes or special characters (“cadeau” would become “ƈąɗẹąս”). Thus, by ignoring unknown words, algorithms will only see the “good” words and will miss the questionable terms.

AI circumventions are not only achieved through digital means. For example, there are ingenious clothes specifically designed to thwart facial recognition tools and make themselves somehow invisible, escaping mass surveillance.

To understand adversarial learning, let’s quickly go back to how an AI learns.

It all starts with a dataset that represents examples of the task to be accomplished: to create a spam detector, you need real spam and normal emails. Then a phase is executed where a mathematical model will learn to distinguish them and execute the task. Finally, this model (or AI) is used in production to provide a service.

Each of these three stages of operation—before, during, and after training—is subject to different types of attacks. The training phase is arguably the most difficult part to exploit because of the difficulty in accessing it. Attack scenarios often assume that training is split across multiple machines and that at least one of them is hostile. The attacker sends back erroneous or distorted messages to modify the final behavior of the AI. This is called a Byzantine attack .

During the pre-training phase, data poisoning relies on the idea that all data is trustworthy. However, an attacker could corrupt this data to influence the future results of the AI. For example, it is possible to introduce a backdoor to manipulate the model in particular cases .

These attacks do not necessarily require sophisticated technical tools. Recommendation systems are particularly sensitive to them, because they depend heavily on user data and behavior. The proliferation of malicious bots on social networks can thus influence suggested content and even impact election results .

Finally, the last type of attack occurs after training and includes evasion attacks that exploit flaws in a model. Spam bypass, seen above, is one example.

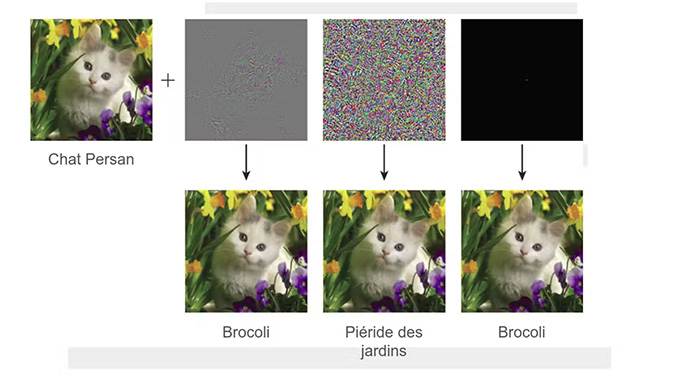

There are different techniques to learn how to perturb the inputs of an AI in order to make it predict what we want. For example, it is possible to introduce a deformation into an image that is imperceptible to humans, but blinds an AI. This raises debates on the safety of autonomous vehicles that would no longer be able to read road signs correctly.

Another threat after training is model extraction . This involves extracting the data on which an AI was trained, either to copy it or, more seriously, to retrieve personal and private information, such as medical information or an address. This is particularly worrying for the user who is not aware of this type of problem and blindly trusts an AI such as ChatGPT.

With every attack comes defense strategies. While models are becoming more reliable, attacks are becoming more complex and difficult to thwart. Knowing this encourages us to be more careful with our personal data and the results of an AI, especially the most invisible ones such as recommendation algorithms

Author Bio: Julien Romero is a Lecturer in Artificial Intelligence at Télécom SudParis – Institut Mines-Télécom